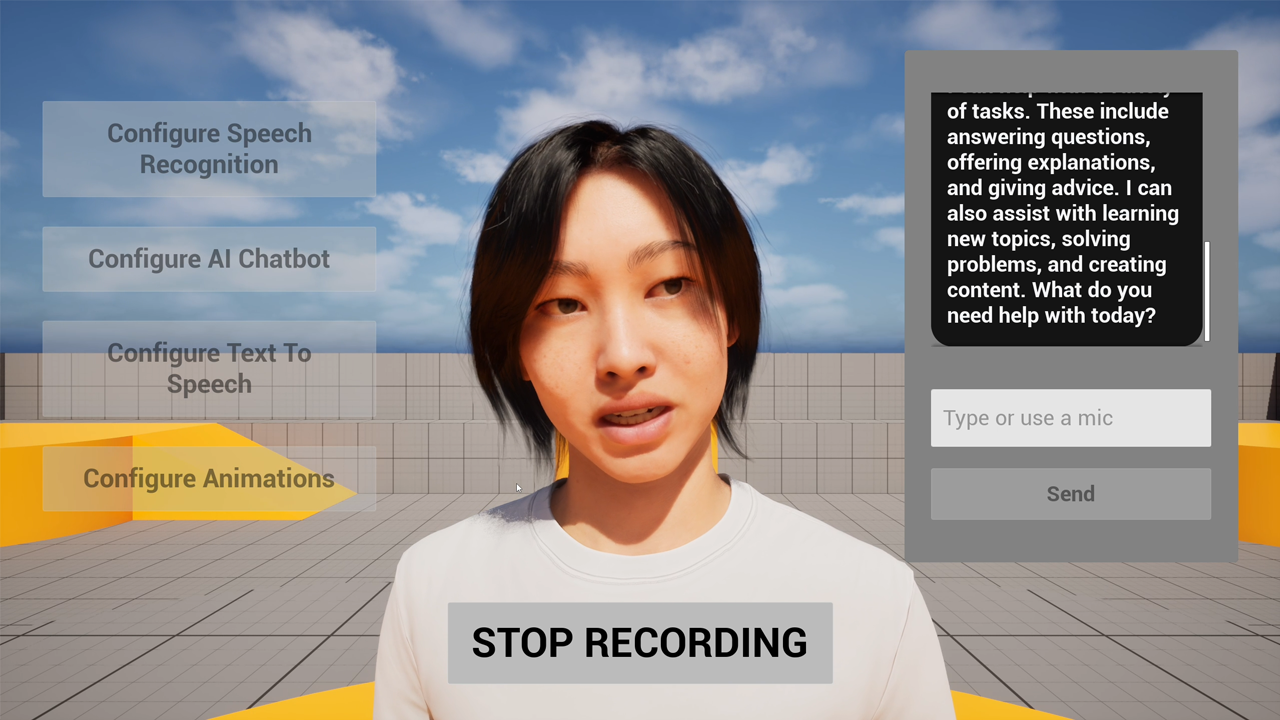

Building a Complete Conversational MetaHuman Speech-to-Speech AI System with Lip Sync in Unreal Engine

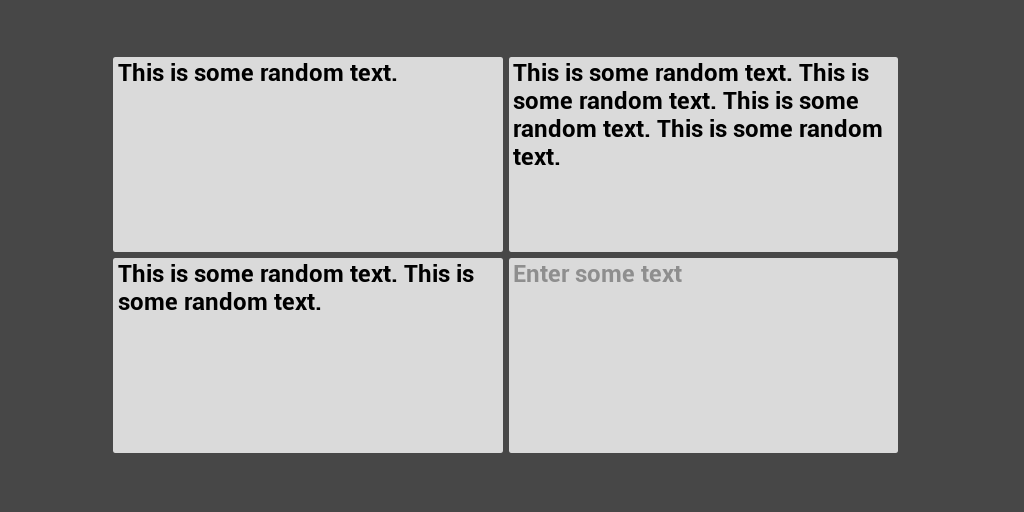

I recently put together a demo project that shows how to create fully interactive AI NPCs in Unreal Engine using speech recognition, AI chatbots, text-to-speech, and realistic lip synchronization. The entire system is built with Blueprints and works across Windows, Linux, Mac, iOS, and Android. If you’ve been exploring AI NPC solutions like ConvAI or Charisma.ai, you’ve probably noticed the tradeoffs: metered API costs that scale with your player count, latency from network round trips, and dependency on cloud infrastructure. This modular approach gives you more control: you can run components locally or pick your own cloud providers, avoid per-conversation billing, and keep your players’ interactions private if needed. You own the pipeline, so you can optimize for what actually matters to your game. Plus, with local inference and direct audio-based lip sync, you can achieve lower latency and more realistic facial animation. Check the demo video below to see the difference yourself. ...